Secure AI Agents in Production

Crittora Secure is the trust layer that authorizes what agents can do—at the moment they act.

CRITTORA

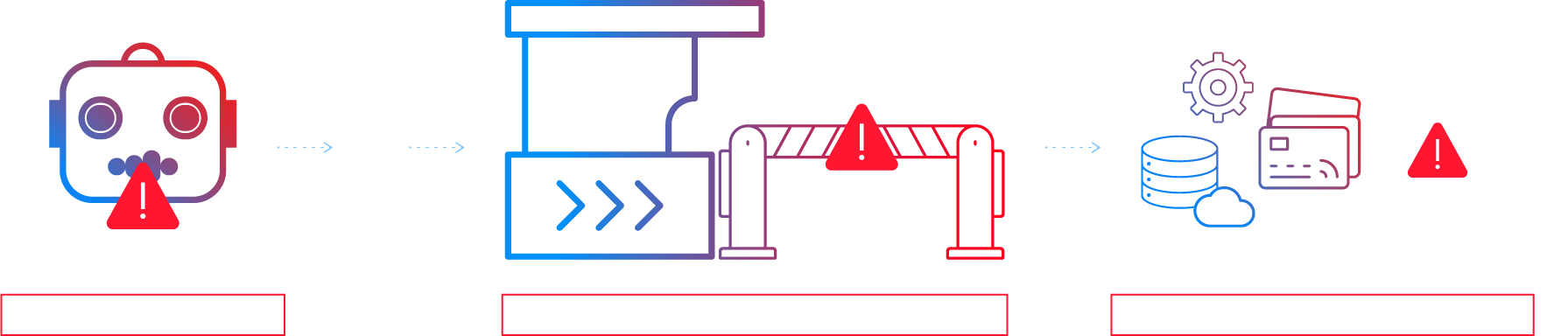

THE MISSING CONTROL BETWEEN AI AGENTS AND REAL SYSTEMS

Crittora Secure is the cryptographic control point between agents and state-changing systems—enforcing explicit, time-bound authority and signed Proof-of-Action receipts for every action.

Why it matters: authority is measurable, enforceable, and auditable when policies are explicit at runtime.

Verify the Agent

Cryptographically verify the actor before execution.

Scope the Permission

Expose only explicitly allowlisted tools and actions.

Prove Every Action

Emit signed receipts: what ran, who requested it, and when.

Why Autonomy Stalls

Control breaks at execution

Autonomy fails when tool access is implicit and authority is not enforced at execution time.

Over-permissioned tools:

The agent can do more than it should.

Untrusted tool boundary:

Requests/responses can be tampered with.

No defensible audit trail:

You can’t prove what really happened.

Execution-time authority gates stop forged calls, replays, and over-broad actions before they commit.

"Everyone says they ‘secure agents.’ Then security asks one question: what actually happens at execution? That’s where most answers fall apart."

- Agent Security Lead, Fintech

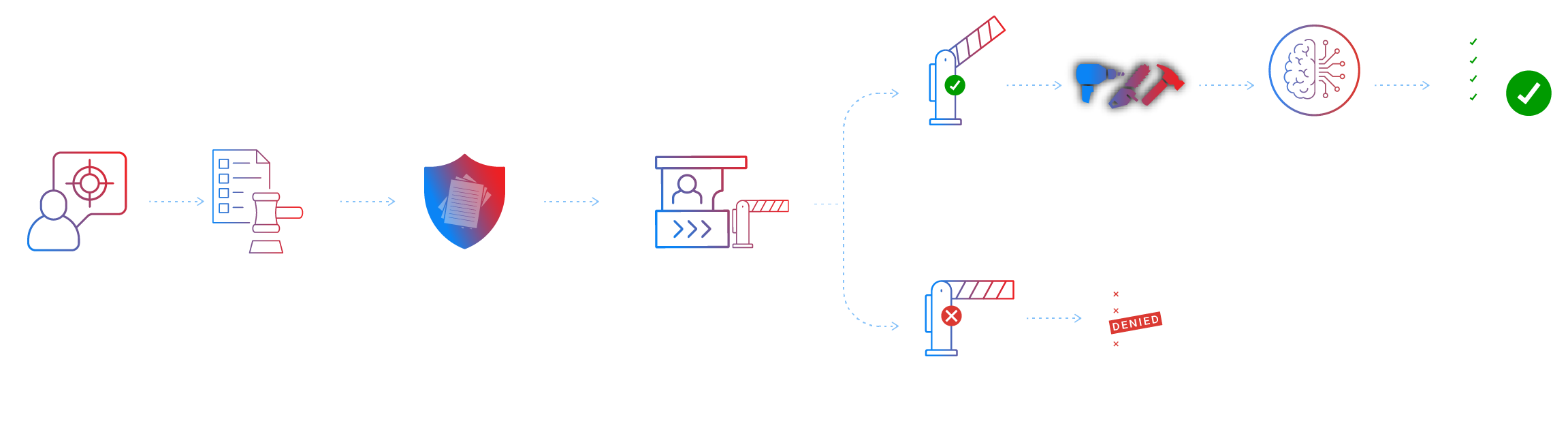

How Crittora Secure Protects the Last Step of AI Execution

Crittora Secure enforces signed, time-bound permission policies at execution, so agents only act when authority is proven.

A hard stop before execution

Every state-changing action hits a mandatory checkpoint at runtime—where identity, scope, and policy are verified before anything is allowed to commit.

Exact authority for a single step

Agents never hold broad or long-lived access. Authority is issued just-in-time for one specific action, bound to the agent, tool, and execution step.

Integrity across the tool boundary

Execution context, inputs, and outputs remain protected as they move across orchestrators, tools, and services—so what runs is exactly what was approved.

Proof that survives after the action

Each approved step produces a signed, portable record tying identity, intent, policy, and request/response together—verifiable even outside the system.

Stop unauthorized agent actions.

Agent Permission Protocol

The Execution-Time Authorization Layer for AI Agents

APP verifies a signed, time-bound permission envelope that binds an agent, a specific task, and an explicit set of tool capabilities—before any tool is exposed.
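To make the envelope idea concrete, here is a minimal sketch of verify-before-expose. It is not the APP wire format or a Crittora API: the field names (`agent`, `task`, `tools`, `not_before`, `expires_at`), the function names, and the use of HMAC-SHA256 with a shared demo key are all assumptions made for illustration. A production design would more likely use asymmetric signatures.

```python
import hashlib
import hmac
import json
import time
from typing import List, Optional

# Hypothetical shared key, for illustration only.
DEMO_KEY = b"demo-key-not-for-production"


def _canonical(payload: dict) -> bytes:
    # Stable serialization so signer and verifier hash identical bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_envelope(payload: dict, key: bytes = DEMO_KEY) -> dict:
    """Attach an HMAC-SHA256 tag to a permission payload (assumed layout)."""
    sig = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "sig": sig}


def exposed_tools(envelope: dict, agent_id: str, task_id: str,
                  now: Optional[float] = None, key: bytes = DEMO_KEY) -> List[str]:
    """Return the tools to expose for this step, or [] on any failure (fail closed)."""
    now = time.time() if now is None else now
    try:
        payload = envelope["payload"]
        expected = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, envelope["sig"]):
            return []  # tampered or forged
        if payload["agent"] != agent_id or payload["task"] != task_id:
            return []  # bound to a different agent or task
        if not (payload["not_before"] <= now < payload["expires_at"]):
            return []  # outside the validity window
        return list(payload["tools"])
    except (KeyError, TypeError, ValueError):
        return []  # malformed envelope
```

The verifier returns an empty tool list for every failure path, so a missing field or a bad signature looks the same to the agent as having no permission at all.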

Agent Production Readiness Evaluation

Pass Your Security Review and Ship Your Agents to Prod

We connect Crittora Secure to a real workflow and surface where forged calls, replayed actions, or standing credentials would have slipped through.

What We Test

State-changing tool calls (APIs, admin actions, automation triggers)

Authority boundaries (scope, expiry, audience binding)

Failure modes (replay, tampering, confused deputy, over-broad tokens)

What You Get

Signed Proof-of-Action receipts for allow/deny

A policy map of tool access by agent/workflow

A short risk summary (blast radius + recommended constraints)

33%

GenAI interactions expected to use action models and autonomous agents by 2028

311B

Web attacks in 2024, a 33% year-over-year increase

37%

Organizations reporting an API security incident in the past 12 months

24%

Breaches where the use of stolen credentials was the top initial access action

FOR DEVELOPERS & AGENT ARCHITECTS

DROP-IN ENFORCEMENT FOR AGENT TOOL CALLS

This is the execution-time authority layer for tool calls, with deterministic verification before any action runs.

Crittora Secure installs in the execution path to verify authority before tools run. Deploy it as a gateway/middleware control, as tool wrappers inside your agent runtime, or via MCP—then emit signed Proof-of-Action receipts for every allow/deny.

Deploy Crittora Secure in front of state-changing APIs and automations (HTTP/gRPC/event consumers). Before a request reaches a system of record, Crittora Secure verifies cryptographic integrity and evaluates the action against explicit scope and expiry. Requests that are out of scope, expired, replayed, or tampered with fail closed. Every decision yields a signed receipt that binds the actor, action, policy, and hashed request/response.
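As an illustration of what a receipt of this shape could contain, the sketch below binds actor, action, policy, decision, and SHA-256 hashes of the request and response under one signature. The receipt layout, the function names, and the HMAC demo key are hypothetical and are not Crittora's actual receipt format.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical signing key, for illustration only.
RECEIPT_KEY = b"demo-receipt-key"


def _sign(fields: dict, key: bytes) -> str:
    canonical = json.dumps(fields, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, canonical, hashlib.sha256).hexdigest()


def make_receipt(actor: str, action: str, policy_id: str, decision: str,
                 request_body: bytes, response_body: bytes,
                 issued_at: Optional[int] = None, key: bytes = RECEIPT_KEY) -> dict:
    """Build a signed record of one allow/deny decision (assumed layout)."""
    fields = {
        "actor": actor,
        "action": action,
        "policy": policy_id,
        "decision": decision,
        # Hashes, not bodies: the receipt proves what was sent without storing it.
        "request_sha256": hashlib.sha256(request_body).hexdigest(),
        "response_sha256": hashlib.sha256(response_body).hexdigest(),
        "issued_at": int(time.time()) if issued_at is None else issued_at,
    }
    return {**fields, "sig": _sign(fields, key)}


def verify_receipt(receipt: dict, key: bytes = RECEIPT_KEY) -> bool:
    """Recompute the signature over every field except 'sig'."""
    fields = {k: v for k, v in receipt.items() if k != "sig"}
    return hmac.compare_digest(_sign(fields, key), str(receipt.get("sig", "")))
```

Because the signature covers every field, changing the action or swapping in a different request hash after the fact invalidates the receipt.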

Wrap tool calls inside LangGraph, LangChain, or custom runtimes so the agent only receives a restricted tool surface per step. Tools are exposed only when the step is authorized, and the wrapper emits receipts inline with workflow execution. This is ideal when you want enforcement tightly coupled to orchestration, step boundaries, and agent identity—without changing downstream services.
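A framework-neutral sketch of a per-step restricted tool surface follows. It deliberately uses plain Python callables rather than LangGraph or LangChain APIs. `StepToolbox`, `ToolNotAuthorized`, and the receipt dictionaries are hypothetical names for illustration, and the `allowed` list is assumed to come from an already verified policy.

```python
from typing import Any, Callable, Dict, Iterable, List


class ToolNotAuthorized(PermissionError):
    """Raised when a step asks for a tool outside its granted surface."""


class StepToolbox:
    """Exposes only the tools authorized for one workflow step."""

    def __init__(self, registry: Dict[str, Callable[..., Any]],
                 allowed: Iterable[str],
                 emit: Callable[[dict], None]) -> None:
        # Anything not explicitly allowed is never handed to the agent.
        self._tools = {name: registry[name] for name in allowed if name in registry}
        self._emit = emit

    def names(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, **kwargs: Any) -> Any:
        tool = self._tools.get(name)
        if tool is None:
            # Fail closed, and record the denied attempt.
            self._emit({"tool": name, "decision": "deny"})
            raise ToolNotAuthorized(name)
        result = tool(**kwargs)
        self._emit({"tool": name, "decision": "allow"})
        return result
```

A usage example, with two hypothetical tools: build `StepToolbox(registry, allowed=["lookup_order"], emit=receipts.append)` for a read-only step, and a call to `issue_refund` raises `ToolNotAuthorized` while still leaving a deny entry in `receipts`.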

Expose Crittora Secure controls as MCP tools so any compatible agent runtime can call them without custom integration work. MCP provides the tool interface; Crittora Secure provides verify-before-execute authority enforcement and signed receipts. This is the fastest path when teams are standardizing on MCP and want policy gating and proof artifacts as first-class tools.

Agent Execution Security Review

Questions Teams Don’t Ask Soon Enough

Treat all external content (docs, web, email, tickets) as untrusted input. Put the hard boundary at the execution layer: actions only run when a sealed, time-bounded permission policy authorizes that specific capability for that specific context. This turns prompt injection from "arbitrary actions" into "attempted actions that get denied unless already authorized." Prompt filtering can reduce bad proposals, but it is not an enforceable security control. Execution gating is.

Retrieval should enrich context, not grant authority. The agent can read untrusted text, but it cannot act on it unless a verified policy allows the requested tool/action within scope, TTL, and audience bounds. This prevents hidden instructions from becoming tool invocations by default. If your system relies only on prompt sanitization, you are betting security on text processing and model compliance. Gating is the reliable boundary.

Containment means the agent is constrained to what was explicitly granted: which capabilities, which targets/resources (if limited), which actor/audience, and for how long. If scopes are tight and TTLs are short, injection becomes far less catastrophic. Honest caveat: if you authorize broad access, injection can still cause broad harm inside that authorization. Containment quality is directly tied to how narrow your permissions are.

The model can request more access, but it cannot create it. If the agent tries to use an unapproved capability, the verifier denies it (fail closed). Any additional authority must come from an explicit escalation step that issues a new, time-bounded policy, often with human approval depending on risk. This prevents "the model got tricked" from turning into "the model granted itself admin."

Planning-time checks do not survive retries, replays, or modified requests once an agent is operating asynchronously. In real systems, the only defensible place to enforce authority is at execution, when the tool or API is about to commit a real change. If authorization happens upstream, you are trusting that nothing about the request, context, or intent has changed along the way.
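One way to picture why the check belongs at execution: bind each grant to a digest of the exact request and make it single-use, then compare at the moment of commit. The sketch below is an assumption-laden illustration, not Crittora's mechanism. `ExecutionGate`, the nonce scheme, and the in-memory grant store are all hypothetical.

```python
import hashlib
import json
from typing import Any, Callable, Dict, Optional, Tuple


class ExecutionGate:
    """Single-use grants bound to the exact request that was approved."""

    def __init__(self) -> None:
        self._grants: Dict[str, str] = {}  # nonce -> approved request digest

    @staticmethod
    def digest(request: dict) -> str:
        canonical = json.dumps(request, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def grant(self, nonce: str, request: dict) -> None:
        self._grants[nonce] = self.digest(request)

    def execute(self, nonce: str, request: dict,
                action: Callable[[dict], Any]) -> Tuple[str, Optional[Any]]:
        # pop() consumes the grant, so a replay of the same nonce finds nothing.
        approved = self._grants.pop(nonce, None)
        if approved is None or approved != self.digest(request):
            return ("denied", None)  # replayed, unknown, or modified request
        return ("allowed", action(request))
```

A request approved at planning time and then altered in transit produces a different digest, and a retried call reuses a spent nonce, so both are denied at the point where the change would actually commit.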